Skip Intro at Scale: How I Built Netflix’s Missing Feature for $0.30 per Movie

Combining GPT-4o mini and Gemini 2.5 Pro to detect intros 20× cheaper than Amazon Rekognition

Everyone loves a good opening, until they’ve seen it for the tenth time.

Netflix users hit the “Skip Intro” button 136 million times per day, collectively saving humanity from 195 years of boring content every 24 hours. Yet despite this clear demand, automated intro detection remains surprisingly primitive.

Current solutions fall short in frustrating ways. Plex’s built-in detection refuses to mark intros shorter than 20 seconds or those that appear mid-episode, forcing viewers into a tedious dance with the fast-forward button.

Professional-grade alternatives like Amazon Rekognition and Google’s Video Intelligence API can handle the task, but at a crushing $0.05 per video minute. That is $6 to process a single two-hour film. For a modest media library, you’re looking at hundreds or thousands of dollars in API costs.

Enter Credit Scout: an AI agent that approaches the problem differently.

Instead of brute-forcing frame-by-frame analysis or relying on rigid pattern matching, Credit Scout leverages modern multimodal LLMs to understand video content the way humans do.

It recognizes the subtle cues that signal when the movie begins and when it’s time to leave.

By combining OpenAI’s Agents SDK with Google Gemini’s vision capabilities, it processes strategically selected low-resolution segments to identify those crucial timestamps: intro end and outro start.

The result? Accurate detection at a fraction of the cost.

In my initial testing across 10 open-source films, Credit Scout consistently nailed both timestamps while keeping the total processing cost under $0.30 per movie, roughly 20 times cheaper than the enterprise APIs.

In this article, I’ll explain how Credit Scout works, share the complete results from my benchmark tests, and show you how to deploy it for your own media library.

Whether you’re managing a personal Plex server or building the next streaming platform, it’s time to give your users what they really want: the ability to skip the fluff and get straight to the story.

Skip to the GitHub repo to start integrating it into your media solution today.

Why intro detection matters

The sheer popularity of the “Skip Intro” button offers a clear window into viewer habits. On Netflix, for instance, this feature is engaged so frequently that users collectively reclaim an astounding 195 years of viewing time daily.

This behavior isn’t just about bypassing a familiar theme song. It shows an important trend in modern content consumption: an increasing impatience with repetitive segments, especially when binge-watching.

The scale of this viewer preference is massive. Those daily skips on Netflix alone redirect roughly 1.7 million hours of human attention (195 years × 8,760 hours per year) from intros to the actual content. Across all platforms globally, the annual total plausibly runs into the billions of hours.

Today’s intro detection landscape is caught between inadequate automation and prohibitive costs:

Plex’s pattern-matching limitations

Plex uses audio fingerprinting within seasons, but rigid rules cripple its effectiveness.

- Ignores intros under 20 seconds

- Misses intros placed after the halfway point

- Struggles with varied music or unconventional placements

- Processing can take days for large libraries

Manual markers don’t scale

Human reviewers marking timestamps is labor-intensive and inconsistent. What counts as the “end” of an intro varies by reviewer and platform, creating a standardization nightmare as content libraries explode.

Enterprise APIs: Powerful but expensive

Amazon Rekognition and Google Cloud Video Intelligence charge around $0.05 to $0.10 per minute.

For a modest 1,000-movie library of two-hour films, that’s roughly $6,000 to $12,000 in processing costs alone. These APIs are also overkill, designed for complex computer vision tasks when you only need to identify where credits begin and end.
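The arithmetic behind these figures is easy to check. The sketch below assumes a flat per-minute rate and a two-hour average runtime. The runtime is an assumption for illustration, and the function names are hypothetical.

```python
def api_cost(minutes: float, rate_per_minute: float) -> float:
    """Cost in dollars of analyzing `minutes` of video at a flat per-minute rate."""
    return round(minutes * rate_per_minute, 2)


def library_cost(movies: int, avg_minutes: float, rate_per_minute: float) -> float:
    """Cost in dollars of processing a whole library at a flat per-minute rate."""
    return round(movies * avg_minutes * rate_per_minute, 2)


if __name__ == "__main__":
    # One two-hour film at the low and high end of the quoted price range.
    print(api_cost(120, 0.05))
    print(api_cost(120, 0.10))
    # A 1,000-movie library, assuming two hours per film (an assumption).
    print(library_cost(1000, 120, 0.05))
    print(library_cost(1000, 120, 0.10))
```

At the low end of the range a single two-hour film comes to $6, which is the figure used for comparison throughout this article.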

Why traditional approaches fail

Pattern-matching is great — until the patterns vanish.

- Cold opens wander: the credits might pop 30s in or five minutes later, depending on the editor’s caffeine level.

- Intros mutate mid-season: cue the surprise remix in episode 6.

- Anthologies rebel: every episode sports a brand-new title card.

- Global releases freestyle: credit rules in Seoul ≠ credit rules in São Paulo.

Pure-audio hacks stumble when the theme song swaps genres, and pure-vision hacks misfire on animated montages. Today’s catalog is too weird for regex cosplay. It needs a model that understands “the story starts here.”

That’s the gap Credit Scout plugs: human-grade intro detection, penny-priced, zero hard-coding, ready for whatever Netflix throws on the front page next.

The Credit Scout architecture

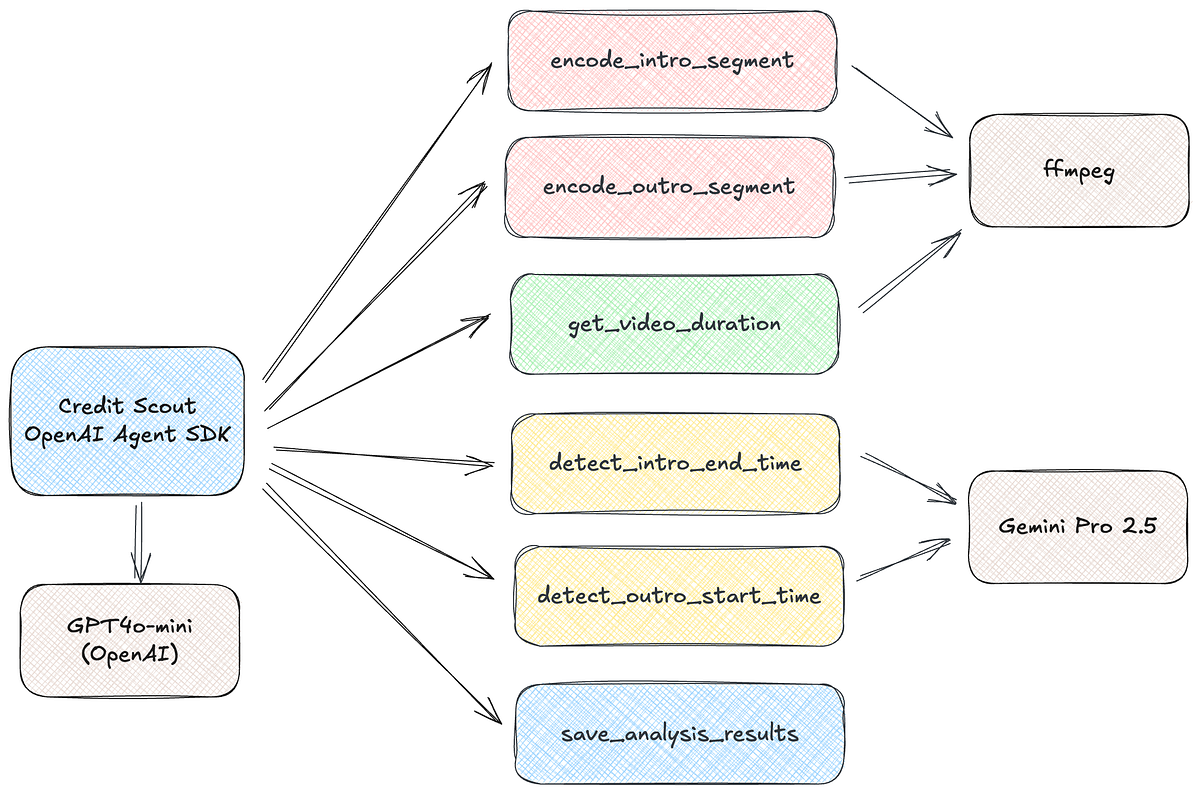

The Credit Scout architecture represents a paradigm shift in approaching video content analysis. Rather than brute-forcing through every frame or relying on rigid pattern matching, the system coordinates between strategic sampling and multimodal AI understanding.

At its core, Credit Scout leverages the OpenAI Agents SDK as the orchestration layer, coordinating between multiple specialized components.

The system intelligently delegates tasks:

- GPT-4o mini handles decision-making and orchestration logic

- Gemini 2.5 Pro provides the visual understanding capabilities

- FFmpeg serves as the video processing backbone, extracting precisely selected segments for analysis.

The key insight driving this architecture is simple yet powerful. We don’t need to analyze the entire video for intro and outro timestamps.

By strategically sampling key segments and leveraging the contextual understanding of modern LLMs, we can achieve accurate detection while processing only a fraction of the video content.

Let's look in more detail at the core components of the architecture.

OpenAI Agents SDK: The orchestration layer

The recently released OpenAI Agents SDK provides the perfect foundation for Credit Scout’s workflow.

With its lightweight, production-ready framework, the SDK enables:

- Agent-based Architecture: Each major task (intro detection, outro detection, video analysis) is handled by a specialized tool controlled by the agent

- Built-in Tracing: Every step of the analysis process is automatically logged, making debugging and optimization straightforward

- Tool Integration: FFmpeg operations and Gemini API calls are wrapped as tools, allowing agents to orchestrate complex workflows with simple function calls

Gemini 2.5 Pro: The visual understanding engine

Google’s Gemini 2.5 Pro serves as Credit Scout’s eyes. Why Gemini over other vision models?

- Multimodal native: Unlike models retrofitted with vision capabilities, Gemini was built from the ground up to understand both visual and textual content

- Long context window: Can process multiple video frames in a single request, understanding temporal relationships

- Cost efficiency: Significantly cheaper than GPT-4V while maintaining comparable accuracy for this specific task

- Deep understanding: Recognizes subtle visual cues like title cards, studio logos, and credit sequences that signal content boundaries

GPT-4o mini: Decision making and pattern recognition

While Gemini handles the visual analysis, GPT-4o mini serves as the brain of the agent:

- Orchestration Logic: Determines which segments to analyze based on video duration and type

- Result Synthesis: Combines multiple Gemini responses to make final timestamp decisions

- Adaptive Strategy: Adjusts sampling approach based on initial results

- Cost Optimization: At a fraction of GPT-4’s cost, it provides sufficient reasoning capability for coordination tasks

FFmpeg: Video processing backbone

FFmpeg remains the industry standard for video manipulation, and Credit Scout leverages its capabilities strategically:

- Precise Segment Extraction: Using keyframe-aware seeking for accurate timestamp extraction

- Resolution Optimization: Downscaling to lower resolutions that preserve visual information while reducing file size

- Format Flexibility: Handles any video format users throw at it

- Efficient Encoding: Optimized encoding parameters balance quality and processing speed

The sampling strategy

Traditional approaches often fall into the trap of analyzing every frame or processing the entire video timeline. This is computationally wasteful for several reasons:

- Intros and outros follow predictable patterns: They typically appear at the beginning and end of content

- Visual redundancy: Most frames within an intro/outro sequence contain similar information

- Diminishing returns: Analyzing more frames doesn’t necessarily improve accuracy for boundary detection

Credit Scout instead employs a targeted approach, focusing computational resources where they matter most.

Resolution optimization

One of Credit Scout’s key innovations is its approach to resolution. API cost is driven mainly by how much video Gemini analyzes rather than by pixel count, so resolution might seem irrelevant, but lowering it pays off in other ways:

- Upload speed: Lower resolution videos upload 5–10x faster, reducing overall processing time

- Processing efficiency: Smaller files mean faster FFmpeg operations and reduced memory usage

- Storage costs: Temporary storage of video segments becomes negligible at lower resolutions

- API latency: Smaller images process faster through vision APIs, improving responsiveness

Credit Scout typically downscales video to grayscale 120p at 5 fps for analysis.

This resolution preserves enough visual detail to identify title cards, credits, and other markers while reducing file sizes by 95%. Testing shows no meaningful accuracy loss compared to analyzing at full resolution, while processing speed improves dramatically.
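To get a feel for why downscaling helps so much, it is worth comparing raw pixel throughput before any compression. The sketch below assumes a 1080p, 24 fps source, which is an assumption for illustration and not a property of the benchmark films.

```python
def pixels_per_second(width: int, height: int, fps: float) -> float:
    """Raw pixels per second, before any codec compression."""
    return width * height * fps


# Assumed source: 1080p at 24 fps (illustrative, not taken from the article).
source = pixels_per_second(1920, 1080, 24)
# Analysis copy: 120p at 5 fps. 214 is the nearest even width for a 16:9 frame.
analysis = pixels_per_second(214, 120, 5)

reduction = 1 - analysis / source
print(f"{reduction:.1%} fewer raw pixels per second")
```

Encoded file sizes shrink less than raw pixel counts, because the codec already removes much of the redundancy in the full-resolution video. Treat this as intuition for the savings, not a prediction of the exact file-size reduction.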

Let's now look at how each component is implemented and glued together.

Implementation deep dive

Credit Scout uses OpenAI’s Agents SDK to orchestrate a four-step analysis pipeline. The steps read like a fixed sequence, but the agent decides which tool to call at each point.

Step 1: Video preprocessing and segmentation

The workflow begins by determining the video’s total duration and creating targeted segments for analysis. It uses FFmpeg for both functions.

By converting to grayscale at 120p resolution and 5 fps, Credit Scout reduces file sizes by more than 95% while preserving the visual information needed for intro/outro detection.
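A minimal sketch of what this step could look like with FFmpeg is shown below. The function names, the libx264 encoder choice, and the exact filter chain are assumptions for illustration. The project's real commands may differ.

```python
import subprocess


def probe_duration(path: str) -> float:
    """Return the container duration in seconds using ffprobe."""
    cmd = [
        "ffprobe", "-v", "error",
        "-show_entries", "format=duration",
        "-of", "default=noprint_wrappers=1:nokey=1",
        path,
    ]
    out = subprocess.run(cmd, capture_output=True, text=True, check=True)
    return float(out.stdout.strip())


def build_segment_command(src: str, dst: str, start: float, length: float,
                          height: int = 120, fps: int = 5, crf: int = 28) -> list[str]:
    """Build an ffmpeg command that cuts one segment and downscales it."""
    # scale=-2:H keeps the aspect ratio and forces an even width,
    # format=gray converts frames to grayscale, fps=N resamples the frame rate.
    video_filter = f"scale=-2:{height},format=gray,fps={fps}"
    return [
        "ffmpeg", "-y",
        "-ss", str(start),   # placed before -i, so ffmpeg seeks in the input
        "-i", src,
        "-t", str(length),   # keep only `length` seconds of output
        "-vf", video_filter,
        "-c:v", "libx264", "-crf", str(crf),
        dst,
    ]
```

Building the argument list separately from running it keeps the command easy to log and test. Passing the list to `subprocess.run(cmd, check=True)` would execute it.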

Step 2: Strategic frame extraction

Unlike traditional approaches that analyze random frames, Credit Scout focuses on specific time windows where transitions typically occur.

- Intro segment: First 5 minutes (covers 99% of movie intros)

- Outro segment: Last 10 minutes (captures credit sequences)

This approach eliminates the need to process hours of content, focusing computational resources where they matter most.

Step 3: Multimodal analysis

Here’s where Credit Scout’s intelligence shines. Using Google’s Gemini 2.5 Pro, the system analyzes video segments with carefully crafted prompts that mirror human visual reasoning.

The detect_film_start function is where the AI actually "watches" the video and determines when the movie really begins. We are teaching an AI to do what you do naturally when watching a film.

We instruct the model using a special prompt. Why does this prompt matter? Consider a typical film sequence like “Get Out”:

- 0:00–0:25: Universal Pictures logo

- 0:25–0:40: “Blumhouse Productions”

- 0:40–0:50: “QC Entertainment”

- 0:50–3:19: Movie intro

- 3:19–4:59: On-screen “Universal presents” credit and the movie title

- 5:00: Actual movie begins

Without specific guidance, an AI might incorrectly identify 0:50 (after the production logos) as the start, missing the intro sequence and title credits that follow. The detailed prompt ensures all corporate and credit elements are properly excluded.

What Gemini actually “sees”: The AI processes the preprocessed video frames to identify text elements, visual transitions, scene composition changes, and temporal patterns. It distinguishes between static corporate logos and dynamic narrative content.

Step 4: Saving the results into a JSON file

At the end of a successful detection, Credit Scout saves the results, including the actual costs, in a JSON file.
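A result file of this kind could be written and read back as sketched below. The field names are illustrative assumptions and not the project's actual schema.

```python
import json
from dataclasses import dataclass, asdict
from pathlib import Path


@dataclass
class DetectionResult:
    # Field names are illustrative, not the project's actual schema.
    video: str
    intro_end_seconds: float
    outro_start_seconds: float
    cost_usd: float


def save_result(result: DetectionResult, path: Path) -> None:
    """Write one detection result as pretty-printed JSON."""
    path.write_text(json.dumps(asdict(result), indent=2), encoding="utf-8")


def load_result(path: Path) -> DetectionResult:
    """Read a result back, for example to place skip markers in a media server."""
    return DetectionResult(**json.loads(path.read_text(encoding="utf-8")))
```

Keeping the cost next to the timestamps makes it easy to total spending across a library later.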

The central agent

We feed the agent all the tools and their documentation so that it can choose the correct tool for each step.

Dynamic resolution adjustment through configuration

Credit Scout allows fine-tuning of quality vs. cost tradeoffs through three environment variables: VIDEO_HEIGHT, VIDEO_CRF, and VIDEO_FPS.

For extremely cost-sensitive applications, you could try reducing resolution even further to 80p or 2fps and testing the impact on accuracy.
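Reading these settings in Python could look like the sketch below. The variable names come from the .env example later in this article. Treating 120, 28, and 5 as fallback defaults is an assumption, and VideoSettings and load_settings are hypothetical names.

```python
import os
from collections.abc import Mapping
from dataclasses import dataclass


@dataclass(frozen=True)
class VideoSettings:
    height: int  # output height in pixels (VIDEO_HEIGHT)
    crf: int     # encoder quality factor (VIDEO_CRF)
    fps: int     # output frame rate (VIDEO_FPS)


def load_settings(env: Mapping[str, str] = os.environ) -> VideoSettings:
    """Read the three video settings, falling back to assumed defaults."""
    return VideoSettings(
        height=int(env.get("VIDEO_HEIGHT", "120")),
        crf=int(env.get("VIDEO_CRF", "28")),
        fps=int(env.get("VIDEO_FPS", "5")),
    )
```

Passing the environment as a parameter keeps the function easy to test: `load_settings({"VIDEO_HEIGHT": "80", "VIDEO_FPS": "2"})` yields the more aggressive settings mentioned above without touching the real environment. Loading the .env file itself is left out of this sketch.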

The benchmark

To validate Credit Scout’s effectiveness, I tested it using 10 Blender Foundation open-source films.

Why open source? Reproducibility.

Every reader can download these same films and verify the results themselves. There are no NDAs, no licensing restrictions, just transparent benchmarking.

Film selection criteria

The test suite was carefully curated to represent diverse intro/outro patterns.

- Animation shorts (Caminandes, Agent327) — Minimal intros, quick cuts to action

- Sci-fi epics (Tears of Steel, Elephants Dream) — Complex title sequences with multiple production cards

- Fantasy narratives (Sintel, Cosmos Laundromat) — Traditional storytelling with extended opening credits

- Experimental films (Sprite Fright, Charge) — Non-conventional intro styles

Success metrics

- Timestamp accuracy: Within ±5 seconds of human-determined ground truth

- Cost efficiency: Total processing cost per film

- Processing reliability: Successful completion rate without errors

- Consistency: Reproducible results across multiple runs
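The timestamp accuracy criterion is simple to express in code. The sketch below shows one way to score predictions against ground truth. The function names are hypothetical.

```python
TOLERANCE_SECONDS = 5


def within_tolerance(predicted: float, truth: float,
                     tolerance: float = TOLERANCE_SECONDS) -> bool:
    """True when a predicted timestamp is within +/- tolerance of ground truth."""
    return abs(predicted - truth) <= tolerance


def success_rate(results: list[tuple[float, float]]) -> float:
    """Fraction of (predicted, truth) pairs that fall within tolerance."""
    if not results:
        return 0.0
    hits = sum(within_tolerance(p, t) for p, t in results)
    return hits / len(results)
```

A film counts as correctly processed only when both its intro end and its outro start pass this check.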

Performance metrics

The test results for each of the ten movies are shown below.

Below are the traces of Tears of Steel, which, as you can see, took around 101 seconds to complete.

Summary statistics:

- Total movies analyzed: 10

- Total cost: $2.07

- Average cost per movie: $0.207

- Success rate: 100% (10/10 films correctly processed)

- Average execution time: ~90 seconds per film

Cost breakdown analysis

The primary driver of cost per movie is the total duration of the video segments analyzed by Gemini, not the video’s compression. Credit Scout is designed to analyze the first 5 minutes for intros and the last 10 minutes for outros.

Deploy it yourself

Ready to give your media library the Netflix-style intro skip experience? Here’s everything you need to get Credit Scout running in your environment.

Prerequisites

OpenAI API Key:

- Used for agent orchestration and workflow management

- Cost: ~$0.001 per film (minimal usage)

- Get yours at: https://platform.openai.com/api-keys

- Required model access: GPT-4o mini

Google Gemini API Key:

- Powers the multimodal video analysis

- Cost: ~$0.297 per film (primary expense)

- Get yours at: https://aistudio.google.com/app/apikey

- Required model access: Gemini 2.5 Pro Preview (limited free tier available)

System requirements

- OS: Linux, macOS, or Windows 10/11

- RAM: 4GB available (video processing can be memory-intensive)

- Storage: 2GB free space for temporary files

- CPU: Multi-core recommended for faster encoding

- Network: Stable internet connection for API calls

Required Software:

- Python 3.12+ with uv package manager

- FFmpeg installed and accessible in PATH

- Ubuntu/Debian: sudo apt install ffmpeg

- macOS: brew install ffmpeg

- Windows: Download from https://ffmpeg.org/download.html

- Install uv: https://docs.astral.sh/uv/getting-started/installation/

Installing and running Credit Scout

1. Clone the repository

git clone https://github.com/PatrickKalkman/credit-scout.git

cd credit-scout

2. Create a .env file in the project root with your API keys.

# .env file

OPENAI_API_KEY=your-openai-api-key-here

GEMINI_API_KEY=your-gemini-api-key-here

# Optional: Customize video processing settings

VIDEO_HEIGHT=120 # Lower = faster, higher = more accurate

VIDEO_CRF=28 # Quality: lower = better, higher = smaller files

VIDEO_FPS=5 # Frame rate: lower = faster processing

3. Run Credit Scout

uv run credit-scout ./your-movie.mp4

Conclusion & what’s next: The roadmap

We started this journey frustrated by a simple problem: enterprise-grade intro detection costs $3 to $6 per movie, which is out of reach for most developers and media enthusiasts.

Through strategic use of multimodal LLMs, intelligent sampling, and aggressive optimization, Credit Scout delivered accurate detection for under $0.30 per movie in my benchmark. Compared with $6 for a two-hour film, that is a 20x cost reduction that democratizes automated video analysis.

But this achievement represents something bigger than just cheaper intro detection. Credit Scout demonstrates how thoughtful AI orchestration can solve complex media problems without breaking the bank.

While platforms like Netflix operate at a different scale with unique constraints, this approach offers a compelling model for efficient and intelligent video analysis.

By combining OpenAI’s Agents SDK with Google’s Gemini vision capabilities and strategic preprocessing, we’ve created a blueprint for intelligent, cost-effective video analysis.

What’s next for Credit Scout?

The current version is just the beginning. Here’s what’s on the horizon:

Pushing the Boundaries: How low can we go with resolution and frame rate while maintaining accuracy? Early experiments suggest we might achieve the same results at 60p resolution, potentially processing the content even faster.

Privacy-First Options: Local LLM integration for users who need to keep their content on-premises. As models like Llama Vision improve, I plan to explore hybrid approaches that balance cost, privacy, and accuracy.

Expanded Intelligence: Besides intros and outros, Credit Scout could automatically detect scene transitions, identify speaking segments, or generate content-aware chapter markers.

Edge Case Mastery: Television content with mid-episode credits, anthology series with varying intro styles, and international content with unique conventions all present interesting challenges to solve.

Try it, break it, improve it

Credit Scout is open source because the best solutions emerge from diverse perspectives. Whether you’re running a personal Plex server or architecting the next streaming platform, your use case will reveal new opportunities and challenges.

Ready to skip the fluff and get straight to the story? The code is waiting for you.

Resources and Links

Ready to build? Here’s everything you need to get started.

Project resources

- Credit Scout GitHub Repository — Complete source code, installation instructions, and contribution guidelines

- Benchmark Dataset — Here you can find all 10 test films for reproducible testing

- Example Results — Sample output files

API Documentation

- OpenAI Agents SDK — Official documentation for the orchestration framework

- Google Gemini API — Vision model integration and pricing details

- FFmpeg Documentation — Video processing and encoding reference

Related projects and inspirations

- Plex Intro Detection — The built-in solution that inspired better alternatives

- Jellyfin Intro Skipper — Community plugin using audio fingerprinting

- Netflix’s Skip Intro Technology — Technical blog post on their approach

- YouTube’s Auto-Chapters — AI-driven content segmentation at scale

Credit Scout is open source and available on GitHub. Join the community shaping the future of AI-assisted media analysis.