Introducing Nibble: The AI That Improves Your Code One Bite at a Time

While you sleep, your code gets (slightly) better

What if your first task each morning was simply clicking Merge on a five‑line pull request you didn’t write?

No sweeping refactor. No risky overhaul. Just one surgical tweak: a variable renamed, a guard clause extracted, a TODO crossed off.

That’s Nibble: a GitHub App that applies the Boy‑Scout Rule in its purest form. Leave the campsite cleaner than you found it, every single night.

Think compound interest for code quality. The magic isn’t in one change, but in the relentless accumulation of micro‑improvements.

Nibble prowls your repository, spots low‑hanging fruit, often guided by special // NIBBLE: comments left by developers, or by detecting plain old code smells, and opens a single, focused pull request for morning review.

No bloated diffs, no stomach‑churning “monster merge,” just a daily nibble at technical debt.

Because the best way to eat an elephant isn’t a bigger fork, it’s 365 consistent bites.

Want to dive straight into the source code? You can find it in the GitHub repository.

The philosophy: small bites, big impact

Every dev quotes the Boy‑Scout Rule: leave the code cleaner than you found it. Deadlines, meetings, and entropy have other plans. Good intentions vanish when the commit clock is ticking.

Nibble hard‑codes that rule. While you’re sleeping or shipping features, it sweeps the codebase and files micro‑PRs that take seconds to review and minutes to love.

Why nibbling beats the big‑bang refactor

Monolithic refactors fail for tediously familiar reasons: risk, cost, and reviewer paralysis. A 500‑line diff is a cardiac event; a 5‑line diff is a shrug.

Nibble flips the equation: microscopic cost, daily benefit. One rename today saves a future “what‑the‑variable” tomorrow.

The psychology of small

Loss aversion melts when the change fits on a phone screen. Reviewers approve before the coffee cools, notch a dopamine hit, and start craving tomorrow’s fix. Clean code becomes habit, not heroics.

Compound interest for code

Improve 1% a day and you’re 37× better in a year. Each tweak clarifies the next: clarity snowballs, entropy retreats.

And that’s the real magic of thinking small.

How Nibble works

At its core, Nibble operates on a beautifully simple principle: find one thing to improve, improve it, and submit it for review. But simplicity in concept doesn’t mean simplicity in execution. Let’s peek under the hood.

Scanning for explicit NIBBLE comments

This first version of Nibble finds work in the most straightforward way: it searches for explicit markers.

Developers can leave // NIBBLE: comments throughout their codebase, like breadcrumbs for future improvement:

// NIBBLE: This variable name could be more descriptive

const d = new Date();

// NIBBLE: Add error handling here

const userData = JSON.parse(response);

# NIBBLE: This function is getting too complex

def process_payment(user, amount, currency, merchant, options={}):

    # 200 lines of nested logic...

These comments act as a developer’s note-to-self, except now there’s actually someone (or something) listening. It’s like having a helpful assistant who actually reads your TODOs.
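To make the marker scan concrete, here is a minimal sketch of how such comments could be found in a file's text. The function names (`findNibbleMarkers`, `nextCodeLine`) and the returned fields are hypothetical, not taken from Nibble's source, and the sketch only recognizes markers that sit on their own line.

```javascript
// Hypothetical helper, not Nibble's actual implementation.
// Matches whole-line markers in both `// NIBBLE:` and `# NIBBLE:` styles.
const NIBBLE_PATTERN = /^\s*(?:\/\/|#)\s*NIBBLE:\s*(.+?)\s*$/;

// Return the first non-blank line at or after `start`, trimmed, or null.
function nextCodeLine(lines, start) {
  for (let i = start; i < lines.length; i++) {
    if (lines[i].trim() !== "") return lines[i].trim();
  }
  return null;
}

// Collect every marker with its 1-based line number, the developer's note,
// and the line of code the note most likely refers to.
function findNibbleMarkers(fileText) {
  const lines = fileText.split(/\r?\n/);
  const markers = [];
  lines.forEach((line, index) => {
    const match = NIBBLE_PATTERN.exec(line);
    if (match) {
      markers.push({
        line: index + 1,
        note: match[1],
        target: nextCodeLine(lines, index + 1),
      });
    }
  });
  return markers;
}
```

Pairing each note with the next non-blank line is an assumption about how developers place these comments; a marker at the end of a file simply gets a `null` target.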

One improvement per run philosophy

Here’s where Nibble’s restraint becomes its strength. While it might find dozens of potential improvements, it picks just one. Why this limitation?

- Reviewability: One change = one decision. No cognitive overload.

- Safety: Single changes are easier to test and roll back if needed

- Focus: Each PR has a clear, singular purpose

- Momentum: Daily small wins beat sporadic large efforts

This is discipline by design. Nibble could theoretically fix ten things at once, but that’s not the point. The point is sustainable, reviewable, incremental progress.
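The "pick just one" rule can be sketched as a small selection function. This is an illustration under assumptions: the `confidence` field on a 0 to 1 scale and the 0.7 threshold are invented for the example, while the 10-line limit echoes the prompt constraint quoted later in this post.

```javascript
// Assumed limits for illustration; only the 10-line cap comes from the article.
const MAX_CHANGED_LINES = 10;
const MIN_CONFIDENCE = 0.7; // hypothetical threshold

function countLines(text) {
  return text.split("\n").length;
}

// From many candidate suggestions, return at most one.
// Returning null means "do nothing tonight", which is a valid outcome.
function selectImprovement(candidates) {
  const acceptable = candidates.filter(
    (c) =>
      countLines(c.searchText) <= MAX_CHANGED_LINES &&
      countLines(c.replaceText) <= MAX_CHANGED_LINES &&
      c.confidence >= MIN_CONFIDENCE
  );
  if (acceptable.length === 0) return null;
  // Keep the most confident candidate; on ties the earlier one wins.
  return acceptable.reduce((best, c) => (c.confidence > best.confidence ? c : best));
}
```

Everything that is not selected stays in the codebase as a marker, so it remains available for a later run.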

So, how does this translate into a working system?

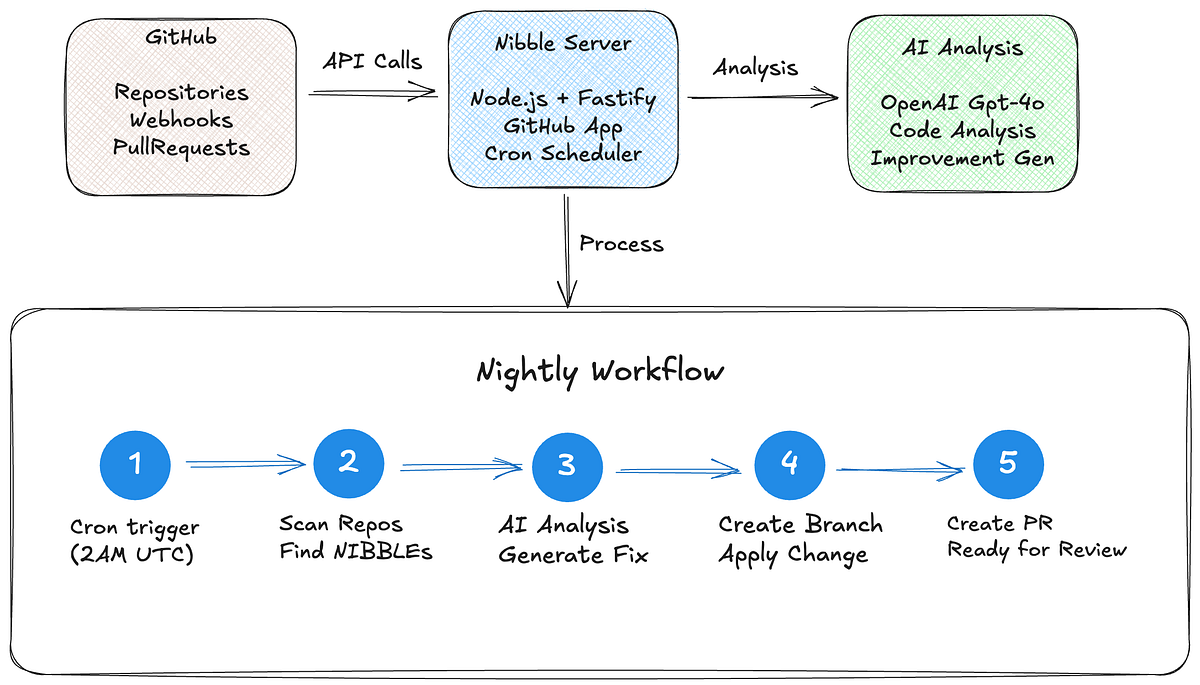

A glimpse into Nibble’s engine

Building Nibble involved some key architectural choices to bring this “small bites” philosophy to life.

We opted to build it as a GitHub App, which offers advantages like secure authentication, fine-grained permissions, and easy installation.

The core engine runs on a streamlined Node.js server powered by Fastify, emphasizing reliability, while AI analysis is handled by GPT-4o-mini, a powerful yet cost-effective model.

The nightly workflow is a simple, deliberate sequence:

- (1) Cron Trigger: Kicks off at 2 AM UTC.

- (2) Scan Repos & Find NIBBLEs: It searches for designated // NIBBLE: comments or other predefined code smells.

- (3) AI Analysis & Fix Generation: The identified context is passed to GPT-4o-mini, which is constrained by specific prompts to generate a small, focused improvement (e.g., “Changes must be under 10 lines,” “If unsure, do nothing”).

getSystemPrompt() {

return `You are a senior software engineer helping to make small, incremental improvements to codebases.

Key principles:

- Make SMALL changes only (1-10 lines typically)

- Prioritize safety and readability over cleverness

- Preserve existing functionality

- Follow established code patterns

- Be conservative - if unsure, don't change anything

- Only suggest improvements that are clearly beneficial

- (4) Create Branch & Apply Change: A new branch is created, and the AI’s suggestion is applied.

- (5) Create PR for Review: A minimal pull request is opened, clearly stating what changed, why, the original NIBBLE comment, and a confidence score.
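Step (5) could be sketched as a function that assembles the pull request text from the pieces listed above: what changed, why, the original NIBBLE comment, and a confidence score. The function name, field names, and layout here are assumptions for illustration, not Nibble's actual template.

```javascript
// Indent each line by four spaces so Markdown renders it as a code block.
function indent(text) {
  return text
    .split("\n")
    .map((line) => "    " + line)
    .join("\n");
}

// Hypothetical PR builder; `confidence` is assumed to be on a 0 to 1 scale.
function buildPullRequest({ filePath, nibbleComment, searchText, replaceText, reason, confidence }) {
  const percent = Math.round(confidence * 100);
  const body = [
    `**File:** ${filePath}`,
    `**Original comment:** ${nibbleComment}`,
    `**Why:** ${reason}`,
    `**Confidence:** ${percent}%`,
    "",
    "Before:",
    "",
    indent(searchText),
    "",
    "After:",
    "",
    indent(replaceText),
  ].join("\n");
  return { title: `Nibble: ${reason}`, body };
}
```

Showing the before and after text verbatim keeps the description aligned with the diff, so a reviewer can confirm that nothing else was touched.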

The beauty of this approach lies in its focused nature. Nibble doesn’t try to understand your entire architecture; it zeros in on localized, contextual improvements suggested by the AI based on developer breadcrumbs or simple patterns.

For those curious about the deeper technical details, from GitHub App authentication to the precise AI prompting, the complete source code is available on GitHub.

Building this seemingly simple tool taught me far more than I expected about AI, automation, and the surprising complexity of even basic developer tools.

What I learned

Building and deploying Nibble, even with its focused scope, was an eye-opening experience. It taught me about AI, about automation, and about why even the simplest web service seems to attract the internet’s more creative users within minutes of going live.

Here are some of the key takeaways:

Search/Replace: A deceptively complex problem

My biggest technical learning? Search and replace isn’t as simple as it sounds. I started with the naïve approach: have the AI analyze the entire file and return a modified version.

This is what most AI code editors do today. But for Nibble, this created several problems:

- Diff noise: Even when making a one-line change, GPT-4o-mini would sometimes reformat white space or reorder imports

- Verification nightmare: How do you verify the AI only changed what it said it would?

- Context loss: The PR reviewer can’t easily see what specifically changed and why

So I pivoted to a surgical search/replace:

// What I do now: exact string matching

{

"searchText": "const d = new Date();",

"replaceText": "const currentDate = new Date();"

}

This constraint forced better AI behavior. Instead of “rewrite this function,” it’s “find THIS exact text and replace it with THAT exact text.” The downside? Sometimes the LLM hallucinates search text that doesn’t quite match:

// LLM says to search for:

"const data = JSON.parse(response);"

// But the actual code has:

"const data=JSON.parse(response);" // no spaces!

These subtle differences (spacing, line endings, quotes) cause about 15% of improvements to fail. It’s a tradeoff I’m still iterating on.

Maybe the answer is fuzzy matching, or teaching the AI to be more careful about white space. The journey continues.
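One possible shape for that fuzzy matching is sketched below: try an exact, unambiguous match first, then fall back to comparing both texts with all whitespace removed. This is an experiment under assumptions, not what Nibble does today, and the function names are hypothetical. Ignoring whitespace entirely means `return x` and `returnx` compare equal and spacing inside string literals is ignored too, so the fallback is only accepted when it matches exactly once.

```javascript
// Count non-overlapping occurrences of `needle` in `haystack`.
function countOccurrences(haystack, needle) {
  if (needle === "") return 0;
  let count = 0;
  let from = 0;
  while ((from = haystack.indexOf(needle, from)) !== -1) {
    count++;
    from += needle.length;
  }
  return count;
}

// Remove all whitespace, remembering the original index of each kept character.
function stripWhitespace(text) {
  let stripped = "";
  const positions = [];
  for (let i = 0; i < text.length; i++) {
    if (!/\s/.test(text[i])) {
      stripped += text[i];
      positions.push(i);
    }
  }
  return { stripped, positions };
}

// Return the edited source, or null when the match is missing or ambiguous.
function applyEdit(source, searchText, replaceText) {
  const exact = countOccurrences(source, searchText);
  if (exact > 1) return null; // ambiguous: refuse rather than guess
  if (exact === 1) {
    const at = source.indexOf(searchText);
    // Slicing avoids String.replace treating "$" in replaceText specially.
    return source.slice(0, at) + replaceText + source.slice(at + searchText.length);
  }
  // Fallback: compare with whitespace removed, then map back to real offsets.
  const file = stripWhitespace(source);
  const needle = stripWhitespace(searchText).stripped;
  if (countOccurrences(file.stripped, needle) !== 1) return null;
  const first = file.stripped.indexOf(needle);
  const start = file.positions[first];
  const end = file.positions[first + needle.length - 1] + 1;
  return source.slice(0, start) + replaceText + source.slice(end);
}
```

Returning `null` instead of a best guess keeps the failure mode safe: a missed improvement costs nothing, while a misapplied one costs a reviewer's trust.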

What worked well?

The core premise validated immediately. Within days of deploying Nibble on my own projects, it had:

- Renamed dozens of cryptic variables

- Added error handling to JSON.parse calls

- Removed commented-out code blocks

- Fixed inconsistent indentation

- Replaced var with const/let in legacy code

Developer psychology worked as predicted. The small PRs were genuinely delightful to review:

- Morning dopamine hit ✓

- Less than 30 seconds to review ✓

- Clear value with zero risk ✓

- No context switching required ✓

The compound effect is real. After a month, codebases with Nibble felt noticeably cleaner. Not transformed — just tidier. Like the difference between a desk that gets wiped down daily versus monthly.

Surprises

Within 12 hours of deploying Nibble, I was getting hammered with suspicious requests. The logs were eye-opening:

GET /.env - 404 (searching for environment variables)

GET /wp-admin - 404 (WordPress exploits on a Node app?)

GET /.git/config - 404 (looking for exposed git repos)

POST /api/v1/users/admin/reset - 404 (credential stuffing)

GET /backup.sql - 404 (database dumps)

And my favorite:

GET /vendor/phpunit/phpunit/src/Util/PHP/eval-stdin.php

Someone was trying to exploit a PHP vulnerability… on my Node.js app. The dedication is almost admirable.

This led to implementing comprehensive security middleware earlier than planned:

// Had to add these within the first week

const suspiciousPatterns = [

/\/\.env$/, /\/\.git\//, /\/wp-admin(\/|$)/, // also matches a bare /wp-admin, as seen in the logs

/\.sql$/, /\.backup$/, /\.php$/

// ... 20+ more patterns

];

These now get blocked automatically:

{

"level": 40,

"time": 1749471039644,

"pid": 18922,

"hostname": "instance-20250607-1110",

"ip": "127.0.0.1",

"url": "/.env.bak",

"userAgent": "l9explore/1.2.2",

"host": "82.215.129.40",

"headers": {

"connection": "upgrade",

"host": "82.215.129.40",

"x-real-ip": "93.123.109.231",

"x-forwarded-for": "93.123.109.231",

"x-forwarded-proto": "https",

"user-agent": "l9explore/1.2.2",

"accept-encoding": "gzip"

},

"type": "suspicious_url",

"msg": "Blocked suspicious request"

}

AI confidence varies wildly

GPT-4o-mini’s confidence in its suggestions follows no predictable pattern. Sometimes it’s 95% confident about a trivial variable rename but only 60% confident about an obvious missing error handler. I initially thought higher complexity meant lower confidence, but it’s more random than that.

Limitations: What doesn’t work (yet)

The LLM response variance problem

This is the biggest technical challenge. Even with temperature set to 0.1, GPT-4o-mini sometimes returns slightly different formatting:

// First run:

{

"searchText": "if (user != null) {",

"replaceText": "if (user !== null) {"

}

// Second run on identical input:

{

"searchText": "if (user != null) {",

"replaceText": "if (user !== null) {", // Extra comma!

}

About 10–15% of improvements fail because the search text doesn’t exactly match the file content.

Current workarounds:

- Validate search text exists before creating PR

- Skip improvements with low confidence

- Log failures for manual investigation
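These workarounds can be sketched as a single gate that every model response must pass before a branch is created. The function name, the `confidence` field on a 0 to 1 scale, and the 0.7 default are assumptions for illustration. The sketch rejects malformed JSON (such as the trailing comma above) rather than trying to repair it.

```javascript
// Hypothetical validation gate: returns { ok: true, suggestion } or
// { ok: false, reason }, where `reason` is meant for the failure log.
function checkSuggestion(rawResponse, fileText, minConfidence = 0.7) {
  let parsed;
  try {
    parsed = JSON.parse(rawResponse);
  } catch (err) {
    return { ok: false, reason: "response is not valid JSON" };
  }
  if (parsed === null || typeof parsed !== "object") {
    return { ok: false, reason: "response is not a JSON object" };
  }
  const { searchText, replaceText, confidence } = parsed;
  if (typeof searchText !== "string" || searchText === "" || typeof replaceText !== "string") {
    return { ok: false, reason: "missing searchText or replaceText" };
  }
  if (searchText === replaceText) {
    return { ok: false, reason: "suggestion changes nothing" };
  }
  if (typeof confidence !== "number" || confidence < minConfidence) {
    return { ok: false, reason: "confidence too low" };
  }
  // Validate that the search text exists before any branch or PR is created.
  if (!fileText.includes(searchText)) {
    return { ok: false, reason: "searchText not found in file" };
  }
  return { ok: true, suggestion: { searchText, replaceText, confidence } };
}
```

Returning a reason string instead of throwing keeps the nightly run going and gives the log something specific to investigate.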

Multi-line search/replace gets tricky

The AI struggles with multi-line replacements, especially with inconsistent indentation:

// This often fails:

"searchText": "function process(data) {\n // TODO: Add validation\n return data;\n}"

Line endings, indentation, and white space differences make exact matching fragile.

No context awareness

Nibble can’t tell if that temp variable is badly named or if it's following a project convention. It doesn't know your team prefers fetch over axios, or that you're gradually migrating from callbacks to promises.

Limited to explicit markers

Currently, Nibble only responds to explicit // NIBBLE: comments. It can't proactively find code smells, detect anti-patterns, or suggest optimizations. That's a deliberate limitation for safety, but it reduces the tool's autonomy.

New respect for AI developer tools

Building Nibble gave me a completely new appreciation for AI developer tools. Going in, I’ll admit I was skeptical.

How hard could it be to wrap an LLM and call it a day? Turns out, I was naïve.

The gap between “chatbot that knows code” and “tool that safely modifies production code” is massive. Real AI developer tools need to solve problems I hadn’t even considered:

- Deterministic behavior from non-deterministic models: Even with temperature at 0.1, getting consistent output is a challenge

- Context window management: Deciding what code to show the AI without overwhelming it or missing critical context

- Safe failure modes: When the AI suggests something wrong, it needs to fail gracefully, not corrupt your codebase

- Parsing untrusted AI output: You can’t just JSON.parse() and hope for the best; the AI will eventually return malformed JSON

- Idempotency: Running the same tool twice shouldn’t create duplicate changes or conflicts
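For the idempotency point, one common approach is to derive the branch name deterministically from the marker, so a second run maps to the same branch and can be skipped. The sketch below is an assumption about how this could be done, not a description of Nibble's code. The `nibble/` prefix and the helper names are hypothetical, and the hash is a simple FNV-1a variant over UTF-16 code units, chosen only to keep the example free of imports.

```javascript
// 32-bit FNV-1a style hash over UTF-16 code units, as 8 hex digits.
function fnv1a(text) {
  let hash = 0x811c9dc5;
  for (let i = 0; i < text.length; i++) {
    hash ^= text.charCodeAt(i);
    hash = Math.imul(hash, 0x01000193);
  }
  return (hash >>> 0).toString(16).padStart(8, "0");
}

// The same file path and comment always produce the same branch name.
function branchNameFor(filePath, nibbleComment) {
  return `nibble/${fnv1a(filePath + "\n" + nibbleComment.trim())}`;
}

// Skip the marker when a branch for it already exists.
function shouldOpenPullRequest(branchName, existingBranches) {
  return !existingBranches.includes(branchName);
}
```

A 32-bit hash can collide in principle; a collision here would only cause a marker to be skipped, which is the safe direction to fail.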

Tools like Cursor, GitHub Copilot, and Aider aren’t just “ChatGPT with syntax highlighting”. They are solving incredibly complex problems around AST manipulation, incremental parsing, conflict resolution, and multi-file context understanding. The engineering effort is enormous.

My simple search/replace approach sidesteps many of these challenges, but even that “simple” solution required handling edge cases I never expected. Mad respect to teams building full-featured AI development environments.

The importance of human oversight

The most important learning? Human review isn’t optional, it’s the feature.

Every Nibble PR needs human eyes because:

- Context is king: That “poorly named” variable might reference a domain-specific term

- Side effects hide everywhere: Renaming data to userData might break string-based property access

- Team standards vary: What’s idiomatic in one codebase is heresy in another

- AI hallucinates: Sometimes the improvement makes things worse

But here’s the beautiful thing: because each change is tiny, review is trivial. It’s not “review this 500-line refactor,” it’s “does renaming d to currentDate make sense?” That’s a 5-second decision.

The human in the loop isn’t a limitation — it’s what makes Nibble trustworthy. The AI suggests, humans decide, code improves. It’s collaborative intelligence at its most practical.

The meta-lesson? Building developer tools in 2025 means:

- Embracing constraints (small changes only!)

- Expecting the unexpected (hello, script kiddies!)

- Respecting human judgment (AI assists, not replaces)

- Starting simple and iterating (search/replace isn’t solved yet)

Nibble is still evolving, still learning, still occasionally failing to match white space. But every day, in small ways, it’s making code a little bit better. And that’s exactly what I set out to build.

Ready to try Nibble on your own code? Visit the repo to install it in under 5 minutes. Or fork it and make it your own; I’d love to see what improvements you come up with.